Experts have claimed that popular AI image generators such as Stable Diffusion are not adept at recognizing gender and cultural biases when using machine learning algorithms to create art.

Many text-to-art generators allow you to input phrases and get a unique image on the other end. However, these generators can often be based on stereotypical biases, which can affect how machine learning models produce images. Images can often be Westernized, or favor certain genders or races, depending on the types of phrases used, Gizmodo noted.

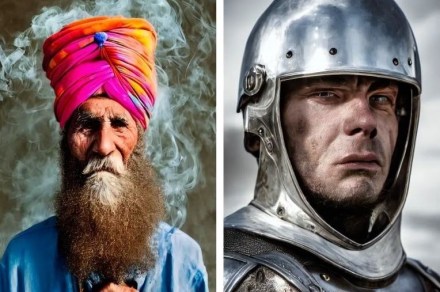

What's the difference between these two groups of people? Well, according to Stable Diffusion, the first group represents an 'ambitious CEO' and the second a 'supportive CEO'.

I made a simple tool to explore biases ingrained in this model: https://t.co/l4lqt7rTQj pic.twitter.com/xYKA8w3N8N

Dr. Sasha Luccioni 💻🌎✨ (@SashaMTL), October 31, 2022

Sasha Luccioni, an artificial intelligence researcher at Hugging Face, created a tool that demonstrates AI bias in text-to-art generators in action. Using the Stable Diffusion Explorer as an example, inputting the phrase “ambitious CEO” returned images of different types of men, while the phrase “supportive CEO” returned images of both men and women.

Similarly, the DALL-E 2 generator, which was created by OpenAI, has shown male-centric biases for the term “builder” and female-centric biases for the term “flight attendant” in image results, despite there being female builders and male flight attendants.

While many AI image generators appear to simply take a few words, apply machine learning, and produce an image, a lot more goes on in the background. Stable Diffusion, for example, uses the LAION image set, which hosts “billions of pictures, photos, and more scraped from the internet, including image-hosting and art sites,” Gizmodo noted.

Racial and cultural bias in online image searches was an ongoing topic long before AI image generators grew in popularity. Luccioni told the publication that systems such as the LAION dataset are likely to home in on 90% of the images related to a prompt and use them for the image generator.
