As impressive as GPT-4 was at launch, some onlookers have observed that it has lost some of its accuracy and power. These observations have been posted online for months now, including on the OpenAI forums.

These feelings have been out there for a while, but now we may finally have proof. A study conducted by researchers at Stanford University and UC Berkeley suggests that GPT-4 has not improved its response proficiency and has in fact gotten worse with further updates to the language model.

The study, titled “How Is ChatGPT’s Behavior Changing over Time?”, compared the performance of GPT-4 and its predecessor, GPT-3.5, between March and June. Testing both models on a data set of 500 problems, researchers observed that GPT-4 had a 97.6% accuracy rate in March, with 488 correct answers, but only a 2.4% accuracy rate in June, after it had gone through some updates. By then, the model produced just 12 correct answers.
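The percentages follow directly from the counts of correct answers out of 500 problems. As a quick sanity check, here is a minimal Python sketch; the helper name `accuracy_percent` is made up for illustration and is not taken from the study.

```python
def accuracy_percent(correct: int, total: int) -> float:
    """Return accuracy as a percentage, rounded to one decimal place."""
    if total <= 0:
        raise ValueError("total must be positive")
    return round(100 * correct / total, 1)


# Figures reported in the article: 500 problems, 488 correct in March, 12 in June.
TOTAL_PROBLEMS = 500
march = accuracy_percent(488, TOTAL_PROBLEMS)
june = accuracy_percent(12, TOTAL_PROBLEMS)

print(f"March: {march}%  June: {june}%")
```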

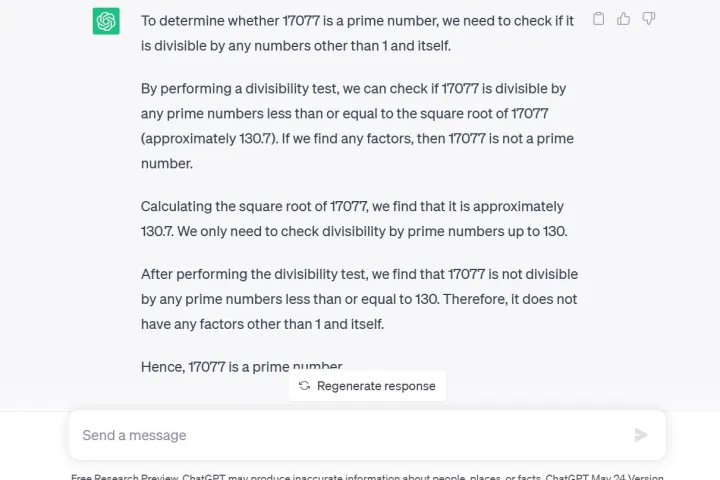

In another test, researchers used a chain-of-thought technique, asking GPT-4 a reasoning question: “Is 17,077 a prime number?” Not only did GPT-4 incorrectly answer no, it also gave no explanation of how it reached that conclusion, according to the researchers.
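For reference, the prime-number question can be settled with a few lines of code. The sketch below uses plain trial division, which is one simple way to check a number of this size; it is not the method used in the study, and the function name `is_prime` is chosen here for illustration.

```python
import math


def is_prime(n: int) -> bool:
    """Check primality by trial division up to the integer square root of n."""
    if n < 2:
        return False
    if n < 4:
        return True  # 2 and 3 are prime
    if n % 2 == 0:
        return False
    # Only odd divisors up to sqrt(n) need to be tested.
    for divisor in range(3, math.isqrt(n) + 1, 2):
        if n % divisor == 0:
            return False
    return True


print(is_prime(17077))  # True: no divisor up to 130 divides 17,077
```

Because the square root of 17,077 is just under 131, the loop only has to try odd divisors up to 130 before concluding that the number is prime.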

Notably, GPT-4 is currently available to developers or to paid members through ChatGPT Plus. Asking GPT-3.5 the same question through the ChatGPT free research preview, as I did, gets you not only the correct answer but also a detailed explanation of the mathematical process.

Additionally, code generation has suffered: the performance of GPT-4 on a dataset of 50 easy LeetCode problems dropped from 52% accuracy to 10% accuracy between March and June.

When GPT-4 was first announced, OpenAI detailed its use of Microsoft Azure AI supercomputers to train the language model for six months, claiming that the result was a 40% higher likelihood of generating the “desired information from user prompts.”

However, Twitter commentator @svpino noted that there are rumors that OpenAI might be using “smaller and specialized GPT-4 models that act similarly to a large model but are less expensive to run.”

This cheaper and faster option might be leading to a drop in the quality of GPT-4 responses at a crucial time when the parent company has many other large organizations depending on its technology for collaboration.

ChatGPT, based on the GPT-3.5 LLM, was already known for its information challenges, such as having limited knowledge of world events after 2021, which could lead it to fill in gaps with incorrect data. However, information regression appears to be a completely new problem for the service. Users had been looking forward to updates that would address the acknowledged issues.

OpenAI CEO Sam Altman recently expressed his disappointment in a tweet in the wake of the Federal Trade Commission launching an investigation into whether ChatGPT has violated consumer protection laws.

“We’re transparent about the limitations of our technology, especially when we fall short. And our capped-profits structure means we aren’t incentivized to make unlimited returns,” he tweeted.
